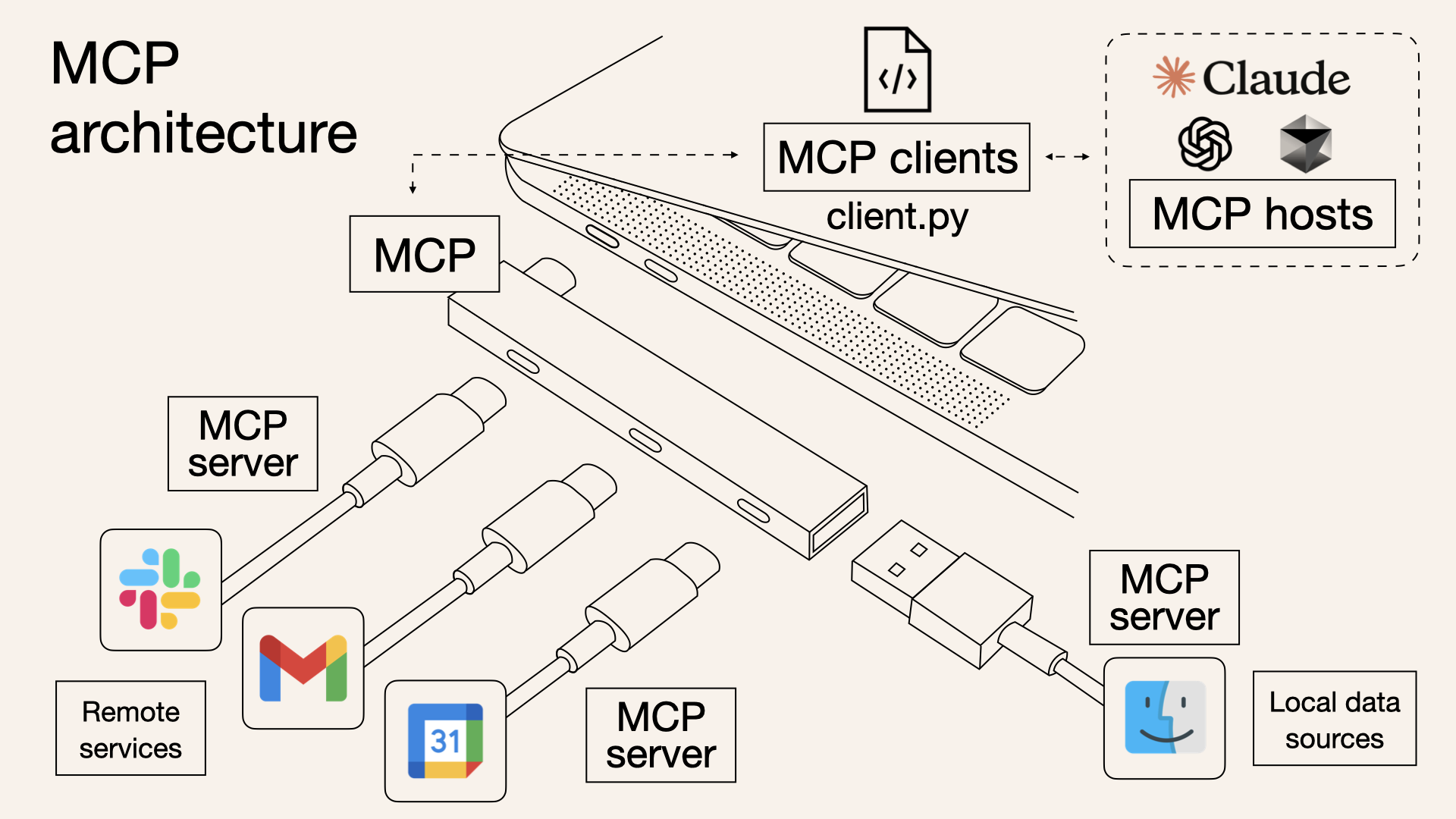

The Model Context Protocol (MCP), a recently developed open protocol, aims to standardise how applications provide context to Large Language Models. Started by Anthropic, MCP makes it easier for AI models such as Claude and ChatGPT to interact with tools and data sources.

MCP is an open protocol, and even though it's still early days, it's gaining traction amongst companies and developers.

Why Choose MCP?

At its root, MCP helps developers build agents and complex workflows on top of LLMs (Large Language Models). When building applications that use LLMs, you will frequently need to integrate with data sources and tools, both to work from and to provide context.

It provides:

- An ever-growing list of pre-built integrations that an LLM of your choice can directly plug into.

- The flexibility to switch between LLM providers and vendors.

- Best practices for securing your data within your infrastructure.

MCP is best visualised as a USB dock for your laptop. A USB hub or dock gives you a standardised way to plug in a drive, device, network card and much more. In the same way, MCP is a standardised plug-and-play system for connecting services to your AI solutions, with some additional benefits.

Issues Solved by MCP

- Standardisation and Interoperability: Before MCP, integrating LLMs with various tools required custom solutions for each combination, leading to inefficiencies. MCP provides a standardised protocol, simplifying these integrations and promoting interoperability across different systems.

- Efficient Context Management: LLMs often struggle with maintaining context across interactions, which can affect their performance in complex tasks. MCP offers a structured approach to manage and share context, enhancing the coherence and effectiveness of AI applications.

- Scalability: Custom integrations can be difficult to scale as the number of tools and data sources grows. MCP's standardised approach allows for more scalable and maintainable integrations, reducing the complexity associated with expanding AI capabilities.

- Security and Access Control: MCP includes features for robust access controls, permissions, and audit trails, ensuring that integrations maintain high security standards while interacting with external systems.
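To put the standardisation and scalability points into numbers, here's a small Python sketch. It assumes every application needs to talk to every tool, and the counts of 5 apps and 20 tools are made-up figures for illustration. With custom integrations the work grows as apps * tools, whereas with a shared protocol each app implements the client side once and each tool implements the server side once.

```python
def custom_integrations(apps: int, tools: int) -> int:
    """Bespoke approach: every app needs its own connector to every tool."""
    return apps * tools


def protocol_integrations(apps: int, tools: int) -> int:
    """Shared protocol: each app implements a client once, each tool a server once."""
    return apps + tools


# Hypothetical counts, purely for illustration.
apps, tools = 5, 20
print("custom:", custom_integrations(apps, tools))      # custom: 100
print("protocol:", protocol_integrations(apps, tools))  # protocol: 25
```

Doubling the number of tools here doubles the custom work (to 200), but only adds 20 to the protocol-based count (to 45).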

Why MCP Matters

If you're an avid user of AI tools and software, you may have noticed issues such as limited context windows, hallucinations and context drift becoming more and more glaring.

Context Drift: When data changes over time, causing an AI to become less accurate.

Hallucinations: The generation of content that's factually incorrect.

Context Windows: The amount of text, measured in tokens, that a model can take into account at once.

Limited Context Windows

LLMs such as GPT and Claude have finite context lengths. Beyond a certain number of tokens, the model begins to forget earlier information, so managing context efficiently is critical in AI applications. When the context window limit is hit, you as a user, or your application, will begin to receive inconsistent responses as the conversation grows longer.
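Here's a minimal Python sketch of the naive way an application might keep a conversation inside a fixed window: keep the newest messages that fit and drop the oldest. It isn't how any particular model or MCP handles context. The 4-characters-per-token estimate is a rough assumption (real models use their own tokenizers), and the 20-token budget is deliberately tiny so the effect is visible.

```python
def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token for English text.
    return max(1, len(text) // 4)


def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages that fit in max_tokens, dropping the oldest first."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > max_tokens:
            break  # this message and everything older is "forgotten"
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "User: Let's go to Benidorm.",
    "Assistant: Great choice! Here are some hotels...",
    "User: Which one has a pool?",
    "Assistant: The second one has a rooftop pool.",
]
print(fit_to_window(history, max_tokens=20))
```

With this budget only the last two messages survive, so the earliest message, the one that picks the destination, is silently dropped.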

Context Drift & Hallucinations

Traditional API-based interactions are typically stateless, meaning context (the conversation so far) must be explicitly passed with each request. As you can guess, this becomes incredibly hard to scale unless you own a sizeable GPU farm or have an endless wallet. On top of that, it's inherently inefficient and prone to error.
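To see why resending context gets expensive, here's a hedged Python sketch of a stateless client. The `{"messages": [...]}` request body is a hypothetical, generic shape rather than any specific provider's API; the point is only that every request must carry the entire history.

```python
import json


def build_request(history: list[dict], new_message: str) -> str:
    """Stateless style: the whole conversation is re-sent with every request."""
    messages = history + [{"role": "user", "content": new_message}]
    return json.dumps({"messages": messages})


history: list[dict] = []
sizes = []
for turn in ["Where should I go on holiday?", "Somewhere sunny.",
             "Benidorm sounds good.", "Book a hotel."]:
    payload = build_request(history, turn)
    sizes.append(len(payload))
    # Pretend the model replied; both turns join the history we must resend next time.
    history.append({"role": "user", "content": turn})
    history.append({"role": "assistant", "content": "(model reply)"})

print(sizes)
```

Each payload is larger than the one before it, because the full conversation so far is serialised again on every turn.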

Context drift itself occurs when your AI tool or LLM loses track of earlier information in a conversation, resulting in confusion, irrelevant output and further hallucinations.

Let's say you're booking a holiday through an AI assistant. You chat about the best places to go, hotel choices and more. Early in the conversation, you settle on Benidorm (No longer on my list of top destinations based on previous...) as your destination. Great, by the end of the conversation you have it all ready to go. The AI then creates your summary just before you go to pay, but states you're now going to Paris. This is most likely caused by context drift and the context window limit being exceeded.

The Benefits of MCP

Model Context Protocol directly addresses the challenges detailed above.

- Efficiency
  - MCP provides a standardised approach, reducing redundancy.
  - By managing and referencing existing context, MCP saves computational resources and bandwidth, improving response speeds and scalability.
  - Result: Instead of repeatedly sending large blocks of contextual data with each request, MCP allows the client and model to exchange context references or IDs. This reduces payload size and increases performance.

- Context Retention
  - MCP allows both LLMs and clients to maintain and reference historical data seamlessly.
  - Improved context retention keeps conversations coherent and relevant, even across long exchanges or multiple sessions.
  - Result: For example, a chatbot using MCP can effortlessly handle ongoing cases, recalling previous conversations with customers or users without requiring explicit reminders, creating a more natural user experience.

- Standardised Communication

- MCP creates a universal standard for context management and interactions between AI models and external tools/ services.

- The standardisation itself simplifies integration, reduces development overhead, and speeds up the adoption of AI capabilities.

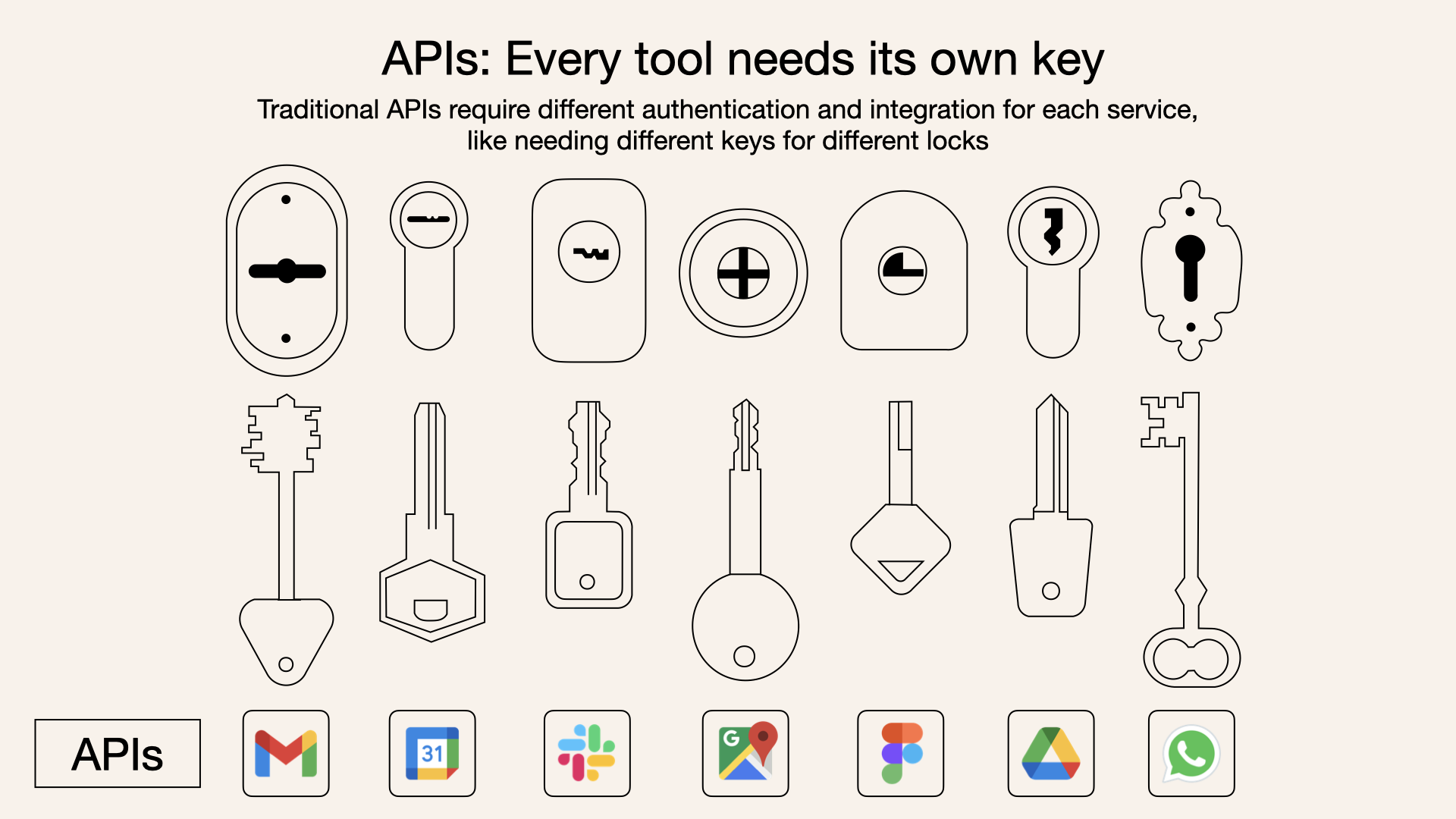

- Result: Instead of devs learning and having to adapt to dozens of different APIs, MCP offers a uniform and predictable way to integrate new tools and data sources. By offering this, it reduces complexity and boosts productivity.
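One concrete kind of reference that MCP does define is the resource URI: a server exposes resources (files, records and so on) under URIs, and a client asks for one with the `resources/read` method. The Python sketch below only builds that JSON-RPC 2.0 request; the URI is hypothetical, and in a real application an MCP SDK would normally construct and send this message for you.

```python
import json
from itertools import count

_ids = count(1)  # simple counter so each request gets a distinct JSON-RPC id


def read_resource_request(uri: str) -> dict:
    """Build a JSON-RPC 2.0 request asking an MCP server for one resource by URI."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "resources/read",
        "params": {"uri": uri},
    }


# Hypothetical resource URI, for illustration only.
request = read_resource_request("file:///trips/benidorm-itinerary.md")
print(json.dumps(request))
```

The server replies with the resource's contents, so the request itself only has to carry the identifier.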

MCP vs. API: Comparison

As we discuss this a bit more, you're probably thinking, "Why not just use an API?"

| Challenge | API | MCP |
|---|---|---|
| Context Retention | Poor; state must be managed explicitly. | Good; built-in automatic state management. |
| Efficiency | Low; redundant data in every request. | High; minimal redundancy through context references. |
| Communications & Integrations | Complex; API-specific documentation. | Simple; standardised, unified protocol. |
| Scalability | Difficult; extensive manual context management. | Easy; automated and streamlined. |
| Risk of Hallucination/Drift | High, due to poor context management. | Lower, due to consistent and structured context handling. |

- Single Protocol: MCP serves as a standardised "connector", providing access to multiple tools and services rather than just one.

- Dynamic Discovery with MCP: MCP lets AI models dynamically discover and interact with tools, needing no hard-coded knowledge of each integration.

- Two-Way Communication with MCP (Stateful): MCP supports persistent, real-time two-way communication, similar to WebSockets. This allows the AI model to both retrieve information and trigger actions dynamically.
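Dynamic discovery works by the client asking a server what it offers. In MCP, a client sends a `tools/list` request and the server replies with each tool's `name`, `description` and a JSON Schema for its inputs (`inputSchema`). The Python sketch below parses such a reply; the two calendar tools are hypothetical examples, not part of any real server.

```python
import json

# A tools/list reply as an MCP server might send it over JSON-RPC 2.0.
# Both tools and their schemas are hypothetical.
raw_response = """
{
  "jsonrpc": "2.0",
  "id": 1,
  "result": {
    "tools": [
      {
        "name": "get_calendar_events",
        "description": "List events for a given day",
        "inputSchema": {"type": "object",
                        "properties": {"date": {"type": "string"}},
                        "required": ["date"]}
      },
      {
        "name": "reschedule_meeting",
        "description": "Move a meeting to a new time",
        "inputSchema": {"type": "object",
                        "properties": {"meeting_id": {"type": "string"},
                                       "new_time": {"type": "string"}},
                        "required": ["meeting_id", "new_time"]}
      }
    ]
  }
}
"""


def discover_tools(response_text: str) -> dict[str, dict]:
    """Index advertised tools by name; nothing about them is hard-coded on the client."""
    response = json.loads(response_text)
    return {tool["name"]: tool for tool in response["result"]["tools"]}


registry = discover_tools(raw_response)
for name, tool in registry.items():
    print(f"{name}: {tool['description']} (args: {tool['inputSchema']['required']})")
```

Because the client only relies on the shape of the reply, adding a new tool on the server side needs no change here.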

MCP Provides Real-Time, Two-Way Communication:

- Pull Data: LLM queries servers for context, such as checking your calendar.

- Trigger Actions: LLM instructs servers to take actions, such as rescheduling meetings or sending emails.
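Both directions use the same message: pulling data and triggering an action are each a `tools/call` request naming a tool and its arguments. A minimal Python sketch, assuming hypothetical calendar tools (the tool names, IDs and dates are illustrative only):

```python
import json
from itertools import count

_ids = count(1)  # simple counter so each request gets a distinct JSON-RPC id


def call_tool(name: str, arguments: dict) -> str:
    """Serialise an MCP tools/call request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


# Pull data: ask the server for context (hypothetical tool and arguments).
pull = call_tool("get_calendar_events", {"date": "2025-06-02"})
# Trigger an action: same message shape, different (also hypothetical) tool.
act = call_tool("reschedule_meeting",
                {"meeting_id": "abc123", "new_time": "2025-06-03T10:00"})
print(pull)
print(act)
```

The message shape is the same either way; whether a call only reads data or changes something depends on the tool the server implements.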

As you may already know, when working with APIs, each service requires its own authentication method and custom integration code, has a different schema, and more. MCP resolves much of this by abstracting these complexities, reducing the burden on developers to understand each service's differences and the time spent reading documentation.

Future of MCP

MCP is constantly evolving, with several expected developments set to shape its future and advance AI communication standards. There is a public roadmap of these developments found here. Some of the future developments worth noting are listed below.

- Remote MCP Support
  - Authentication and Authorisation.
  - Service Discovery.
  - Stateless Operations.

- SDK and Language Support
  - Java and Kotlin SDK release.

- Server and Tooling Improvements
  - Simplification surrounding the SDKs.
  - Dockerised MCP Servers.

Resources and Further Reading

- Norah Sakal Blog - https://norahsakal.com/blog/mcp-vs-api-model-context-protocol-explained/

- Model Context Protocol documentation - https://modelcontextprotocol.io/introduction

Fin

I hope this post has helped increase your understanding of the Model Context Protocol and how it differs from APIs.

If you have any questions, please let me know in the comments.

Thank you for reading and go build!